Reliability DRBD

In my last post, I showed that DRBD could be used diskless, which effectively does the same as exposing a disk with iSCSI. However, DRBD can do more than just become an iSCSI target, and its most known feature is replicating disks over a network.

This post will look into mounting a DRBD device diskless and testing its reliability when one of the two backing nodes fails and more.

I started by mounting the DRBD disk on node 3, the diskless node. If you run drbdadm status it should show the following:

root@drbd1:~# drbdadm status

test-disk role:Secondary

disk:UpToDate

drbd2 role:Secondary

peer-disk:UpToDate

drbd3 role:Primary

peer-disk:DisklessAfter it's mounted, I've created a small test file and installed pv. I started writing the test file slowly to the disk. For now, we don't want to overload the disk or fill it up too quickly to perform reliability tests.

I gave node one a shutdown command to test the reliability under normal circumstances. After it came back, I gave node two a shutdown command.

# Diskless DRBD node 3

root@drbd3:~# dd if=/dev/urandom bs=1M count=1 > /testfile

root@drbd3:~# cat /testfile | pv -L 40000 -r -p -e -s 1M > /mnt/testfile

[39.3KiB/s] [=====> ] 11% ETA 0:00:23

# DRBD node 1

root@drbd1:~# reboot

Connection to 192.168.178.199 closed by remote host.

Connection to 192.168.178.199 closed.

# DRBD node 2

root@drbd2:~# drbdadm status

test-disk role:Secondary

disk:UpToDate

drbd1 connection:Connecting

drbd3 role:Primary

peer-disk:Diskless

# DRBD node 1

root@drbd1:~# drbdadm adjust all

Marked additional 4096 KB as out-of-sync based on AL.

root@drbd1:~# drbdadm status

test-disk role:Secondary

disk:UpToDate

drbd2 role:Secondary

peer-disk:UpToDate

drbd3 role:Primary

peer-disk:Diskless

# Diskless DRBD node 3

root@drbd3:~# md5sum /testfile && md5sum /mnt/testfile

553118a49cea22b739c2cf43fa53ae86 /testfile

553118a49cea22b739c2cf43fa53ae86 /mnt/testfileDuring the reboot of DRBD node one, the writes on DRBD node three were halted shortly but came back very soon after.

When applying more pressure on the disks using a 3GB test file and unlimited speed, the disk of the rebooted server became inconsistent and needed a resync.

root@drbd2:~# reboot

Connection to 192.168.178.103 closed by remote host.

Connection to 192.168.178.103 closed.

root@DESKTOP-2RFLM66:~# ssh 192.168.178.103

root@drbd2:~# drbdadm status

# No currently configured DRBD found.

root@drbd2:~# drbdadm adjust all

root@drbd2:~# drbdadm status

test-disk role:Secondary

disk:Inconsistent

drbd1 role:Secondary

replication:SyncTarget peer-disk:UpToDate done:7.28

drbd3 role:Primary

peer-disk:Diskless resync-suspended:dependency

# Diskless DRBD node 3

root@drbd3:~# md5sum /testfile && md5sum /mnt/testfile

d67f12594b8f29c77fc37a1d81f6f981 /testfile

d67f12594b8f29c77fc37a1d81f6f981 /mnt/testfile

root@drbd3:~# md5sum /testfile && md5sum /mnt/testfile

d67f12594b8f29c77fc37a1d81f6f981 /testfile

d67f12594b8f29c77fc37a1d81f6f981 /mnt/testfile

root@drbd3:~# md5sum /testfile && md5sum /mnt/testfile

d67f12594b8f29c77fc37a1d81f6f981 /testfile

d67f12594b8f29c77fc37a1d81f6f981 /mnt/testfileSo DRBD seems to be very stable when the servers are rebooted gracefully. But what happens if we reboot them both?

root@drbd3:~# cat /testfile | pv -r -p -e -s 3000M > /mnt/testfile; md5sum /testfile && md5sum /mnt/testfile

[3.67MiB/s] [=======================================> ] 36% ETA 0:00:22

pv: write failed: Read-only file system

Message from syslogd@drbd3 at Feb 28 13:50:38 ...

kernel:[ 4498.824570] EXT4-fs (drbd1): failed to convert unwritten extents to written extents -- potential data loss! (inode 12, error -30)

Message from syslogd@drbd3 at Feb 28 13:50:38 ...

kernel:[ 4498.825393] EXT4-fs (drbd1): failed to convert unwritten extents to written extents -- potential data loss! (inode 12, error -30)

Message from syslogd@drbd3 at Feb 28 13:50:38 ...

kernel:[ 4498.826171] EXT4-fs (drbd1): failed to convert unwritten extents to written extents -- potential data loss! (inode 12, error -30)

Message from syslogd@drbd3 at Feb 28 13:50:38 ...

kernel:[ 4498.826876] EXT4-fs (drbd1): failed to convert unwritten extents to written extents -- potential data loss! (inode 12, error -30)

Message from syslogd@drbd3 at Feb 28 13:50:38 ...

kernel:[ 4498.827601] EXT4-fs (drbd1): failed to convert unwritten extents to written extents -- potential data loss! (inode 12, error -30)

Message from syslogd@drbd3 at Feb 28 13:50:38 ...

kernel:[ 4498.828365] EXT4-fs (drbd1): failed to convert unwritten extents to written extents -- potential data loss! (inode 12, error -30)

Message from syslogd@drbd3 at Feb 28 13:50:38 ...

kernel:[ 4498.829102] EXT4-fs (drbd1): failed to convert unwritten extents to written extents -- potential data loss! (inode 12, error -30)

d67f12594b8f29c77fc37a1d81f6f981 /testfile

md5sum: /mnt/testfile: Input/output error

root@drbd3:~# md5sum /testfile && md5sum /mnt/testfile

d67f12594b8f29c77fc37a1d81f6f981 /testfile

2f80ddfb7fe21b9294b2e3663c0a0644 /mnt/testfile

root@drbd3:~# mount | grep mnt

/dev/drbd1 on /mnt type ext4 (ro,relatime)It doesn't like that. But the disk seems to be OK to the point where it could write. Of course, you don't want this to happen, but at least the disks are still mountable and readable.

What if the network starts flapping?

root@drbd1:~# drbdadm status

test-disk role:Secondary

disk:UpToDate

drbd2 role:Secondary

peer-disk:UpToDate

drbd3 role:Primary

peer-disk:Diskless

... Connectivity failure due to tagging VM with wrong VLAN in Hyper-V

... Restoring VLAN settings

root@drbd1:~# drbdadm status

test-disk role:Secondary

disk:UpToDate

drbd2 connection:Connecting

drbd3 connection:Connecting

root@drbd1:~# drbdadm status

test-disk role:Secondary

disk:Inconsistent

drbd2 role:Secondary

replication:SyncTarget peer-disk:UpToDate done:5.39

drbd3 role:Primary

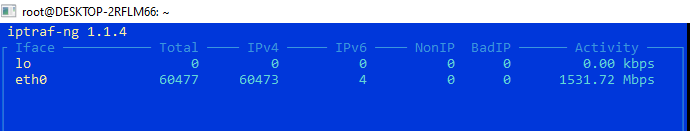

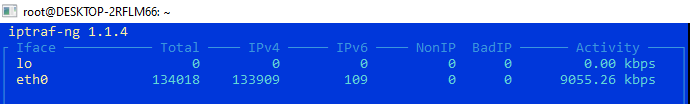

peer-disk:Diskless resync-suspended:dependencyWriting to the disk was just as fast as writing when both were available.

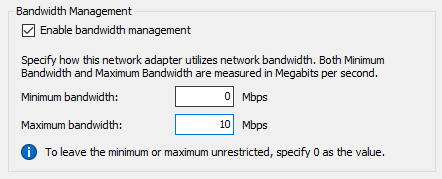

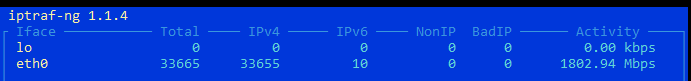

What if we have a broken network connection that allows 10Mbps?

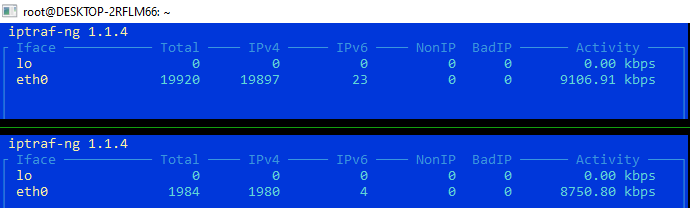

However, this only seems to work on outgoing traffic, not incoming traffic. While reading from the disk, both nodes are limited at 10Mbps if one of them is.

When setting the DRBD "test-disk" down on node 1, the speed of node 2 became unlimited again.

Interesting to see it balances the reads on both nodes.

What if a node gets panicked during writes?

Let's reset DRBD node two while writing at full speed.

root@drbd2:~# packet_write_wait: Connection to 192.168.178.103 port 22: Broken pipe

root@DESKTOP-2RFLM66:~# ssh 192.168.178.103

Last login: Mon Feb 28 14:32:18 2022 from 192.168.178.47

root@drbd2:~# drbdadm status

# No currently configured DRBD found.

root@drbd2:~# drbdadm adjust all

Marked additional 4948 MB as out-of-sync based on AL.

root@drbd2:~# drbdadm status

test-disk role:Secondary

disk:Inconsistent

drbd1 role:Secondary

replication:SyncTarget peer-disk:UpToDate done:0.21

drbd3 role:Primary

peer-disk:Diskless resync-suspended:dependencyBesides a short hiccup, we don't notice anything after DRBD declares node two unavailable.

Conclusion

DRBD has shown to be very stable. Rebooting or resetting DRBD nodes will result in a short hiccup but will continue to work just fine. I couldn't yet figure out why limiting one node its network bandwidth results in both nodes being limited in read-speed, and I'd like to see that being balanced based on the congestion of the network. In the next DRBD post, I hope to look at LINSTOR.