Isolating DevOps changes using ephemeral infra environments

This blog post describes DevOps' ephemeral environments, a way for DevOps engineers to create safe environments for testing changes to the cluster.

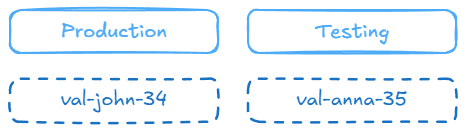

Most companies have a production and a testing environment for development. Both are used daily by developers and users. Platform or DevOps engineers often use these test environments too, and a faulty change of theirs can break the environment for everyone. Some companies schedule these changes "outside of working hours," which is horrible for the people working on them.

For the application developers, it's normal to create a branch, write some code, and push it to git to be presented with an ephemeral environment—an instance of the application made just in time for you.

In your application instance, you click around and make some API calls. All without interacting with the production environment because you're in the test environment. Your code is doing fine, but suddenly, API calls fail randomly or even stop responding altogether. After debugging for a few minutes, you figure out that someone in the DevOps team is also working on some changes, breaking the test cluster.

The Kubernetes ingress controller needs an update, and just like your own code, the changes don't always work immediately. Your colleagues next to you are all hitting the F5 button like there is no tomorrow. The Slack channel gets pinged: "Cluster down??"

This situation happens more than DevOps'ers like to admit. Although you're testing it on the test cluster, it is production to the company. The solution is easy: yet another cluster, right? Prod, test, validation? Like a DevOps test cluster? If a DevOps test cluster exists, will only one person work on stuff?

What if I told you there was an elegant solution?

The idea

Having DevOps' ephemeral environments means you can create and destroy clusters whenever you're working on something as a DevOps'er, just like the developers do. This creates a safe environment for you to experiment with and test changes to the infrastructure.

Test is a production cluster

When a cluster can't be deleted, it's production to someone, and that someone is affected when you kill it off or play around with it. This means that when a DevOps engineer wrecks a test cluster and nobody can work anymore, they have accidentally broken a cluster that was production to their colleagues.

Hand-sculpting a beautifully unstable cluster

When creating ephemeral clusters, it is important to reimagine how your production clusters exist. These clusters shouldn't be hand-sculpted masterpieces of art; instead, they should be easily copied or reproduced like printouts. Adding pieces to your infrastructure piece by piece can cause your blueprint to become unstable. When the time comes for you to rebuild your testing or production cluster, it will no longer be possible to build it up without taking hours to figure out what is wrong. Even worse, you aren't used to creating new clusters.

Then there is ClickOps'ing and Ninja'ing, which create unpredictable behavior, something we don't want in production. There is no record of change, and nobody remembers what happened three days ago.

Therefore, it's essential to be able to spin up a replica of that production cluster whenever you need it and throw it away when you're heading home.

Cluster generation with PRs

When you start working on something—an update to a controller, for example—you should do this on a cluster that is as close to production as possible. Testing in production doesn't fly for infrastructure, so you need a copy. Let's take a look at the options:

- Production: Impacts customers

- Testing: Impacts developer colleagues

- Persistent validation: Impacts DevOps colleagues and is expensive

- Ephemeral validation: Impacts only you

Now, this isn't easily done. Converting your blueprint to roll out 2+n clusters will require changes. Your blueprints might also require manual steps like allocating external resources (Active Directory, cloud resources, names, etc.). However, the biggest problem you will probably have is reproducibility.

The most important aspect of using ephemeral cluster generation is having a reproducible cluster. Whenever a PR is opened for infra, the environment should be built automatically, and it should be destroyed automatically when the PR is closed. Manual interventions make starting up a new cluster annoying and should be avoided entirely. If you need to write a guide explaining how the mechanism works, that is a sign you took shortcuts. It should work without explanation.
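As a minimal sketch of that lifecycle, the function below maps a PR event to what should happen to its cluster. The action strings are modelled on GitHub's pull request events; the `validation-pr-<number>` naming scheme and the function names are assumptions made for illustration, not part of any specific tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PullRequestEvent:
    number: int
    action: str  # e.g. "opened", "reopened", "synchronize", "closed"


def cluster_name(pr_number: int) -> str:
    """Derive the cluster name from the PR so nobody has to pick one by hand."""
    # Hypothetical naming scheme.
    return f"validation-pr-{pr_number}"


def plan_action(event: PullRequestEvent) -> tuple[str, str]:
    """Return (what to do, which cluster) for a PR event."""
    name = cluster_name(event.number)
    if event.action in ("opened", "reopened"):
        return ("create", name)
    if event.action == "synchronize":  # new commits were pushed to the PR
        return ("update", name)
    if event.action == "closed":  # merged or abandoned: clean up either way
        return ("destroy", name)
    return ("ignore", name)
```

Because the name is derived from the PR number, the create and destroy steps always agree on which cluster they are talking about, without any manual bookkeeping.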

Cluster upgrade testing

Having the ability to generate clusters brings another great feature: upgrade tests. An upgrade test runs a pipeline on your ephemeral environment, which:

- Destroys your cluster completely

- Builds it back up from the main branch

- Runs the test suite of the main branch to verify it's working correctly

- Upgrades the cluster to your branch in one go

- Runs the test suite of your branch

Using such a workflow ensures that your changes will work on the clusters currently running the main branch.
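The five steps above could be sketched roughly like this. The `ClusterOps` methods are hypothetical hooks that you would wire to your own tooling; only the ordering and the stop-on-first-failure behaviour are the point here.

```python
from typing import Protocol


class ClusterOps(Protocol):
    """Hypothetical hooks; each returns True on success."""

    def destroy(self) -> bool: ...
    def build(self, ref: str) -> bool: ...
    def run_tests(self, ref: str) -> bool: ...
    def upgrade(self, ref: str) -> bool: ...


def run_upgrade_test(
    ops: ClusterOps, branch: str, main: str = "main"
) -> tuple[bool, list[str]]:
    """Run the upgrade test and return (success, log of steps)."""
    steps = [
        ("destroy", lambda: ops.destroy()),
        (f"build:{main}", lambda: ops.build(main)),
        (f"test:{main}", lambda: ops.run_tests(main)),
        (f"upgrade:{branch}", lambda: ops.upgrade(branch)),
        (f"test:{branch}", lambda: ops.run_tests(branch)),
    ]
    log: list[str] = []
    for name, step in steps:
        if not step():
            # Stop at the first failing step so the log shows where it broke.
            log.append(f"FAILED {name}")
            return False, log
        log.append(name)
    return True, log
```

Running the main branch's test suite before the upgrade matters: if that step fails, the problem is in the baseline and not in your change.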

The most obvious scenario for this is updating the cluster, whether it's something like Crossplane or Kubernetes itself.

Example scenario

You could run into dependency constraints whenever you're building a new feature. For example, Crossplane creates its CRDs itself; ArgoCD does not install them from the Helm chart. This can work when you're slowly building your change:

- Add Crossplane to your setup

- Crossplane creates the Provider CRD

- You add the S3 AWS provider

But what if you merge this as one run into production?

- ArgoCD adds Crossplane and the S3 AWS Provider to your setup

- ArgoCD fails to dry-run Helm because it doesn't know the Provider CRD

By running an upgrade test, you would have found this flaw early.
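To make the ordering problem concrete, here is a toy model of it. It is not how ArgoCD or Crossplane are implemented: the `registers` field, the manifest names, and the `sync` function are all invented for illustration. A sync is rejected when it contains a kind the cluster doesn't know yet, and a workload only registers its new kinds after a successful sync.

```python
BUILTIN_KINDS = frozenset({"Namespace", "Deployment", "Service"})

# Hypothetical, heavily simplified manifests.
CROSSPLANE = {"kind": "Deployment", "name": "crossplane", "registers": ["Provider"]}
S3_PROVIDER = {"kind": "Provider", "name": "provider-aws-s3"}


def sync(manifests: list[dict], known_kinds: frozenset) -> frozenset:
    """Dry-run the manifests, then return the kinds known after the sync."""
    unknown = sorted({m["kind"] for m in manifests} - known_kinds)
    if unknown:
        raise LookupError(f"dry-run failed, unknown kinds: {unknown}")
    # Only after a successful sync does the workload run and register its CRDs.
    registered = {kind for m in manifests for kind in m.get("registers", [])}
    return known_kinds | registered


# Step by step: works, because Provider is known before it is used.
known = sync([CROSSPLANE], BUILTIN_KINDS)
sync([S3_PROVIDER], known)

# In one go: fails, because Provider is not known at dry-run time.
try:
    sync([CROSSPLANE, S3_PROVIDER], BUILTIN_KINDS)
except LookupError as err:
    print(err)
```

An upgrade test exercises exactly the second path, which is why it catches this class of error before production does.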

This is one of the most confidence-building features of having DevOps' ephemeral environments.

Cost control

Running additional environments isn't free. When you're working on something, a complete extra cluster will be created, and you'll pay for the resources you use. It's essential to keep track of the costs of these environments.

Track costs

If you're in AWS or any other cloud that uses the pay-per-minute model, you can put the resources in a separate group. This can be done using tags or separate accounts (which is also nice for security). Set budgets and notifications for when you exceed them, so you don't run into a $10,000 bill just because some runaway controller created hundreds of RDS databases.
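As a rough sketch of the tag-based approach, the helpers below group billing line items by a tag and flag every environment that exceeds its budget. The data shape, the `environment` tag key, and the budget figures are assumptions; in practice the numbers would come from your cloud provider's billing export.

```python
from collections import defaultdict


def cost_per_environment(line_items: list[dict], tag_key: str = "environment") -> dict:
    """Sum cost per value of the given tag; untagged items get their own bucket."""
    totals: dict = defaultdict(float)
    for item in line_items:
        env = item.get("tags", {}).get(tag_key, "untagged")
        totals[env] += item["cost_usd"]
    return dict(totals)


def over_budget(totals: dict, budgets: dict) -> list[str]:
    """Return environments above budget. No budget means a budget of zero."""
    return sorted(env for env, cost in totals.items() if cost > budgets.get(env, 0.0))


items = [
    {"cost_usd": 12.5, "tags": {"environment": "validation-pr-42"}},
    {"cost_usd": 30.0, "tags": {"environment": "validation-pr-42"}},
    {"cost_usd": 4.0, "tags": {}},  # someone forgot to tag this one
]
totals = cost_per_environment(items)
print(over_budget(totals, {"validation-pr-42": 40.0}))
```

Treating a missing budget as zero is a deliberate choice in this sketch: untagged spend is flagged immediately instead of hiding in the total.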

Shut down the environment

The ability to start environments whenever you want also allows you to delete environments you're not currently working on. For example, when you're heading home at the end of the day, you can shut down the environment to save costs. It takes time for your environment to become ready, so you will probably not shut it down for a bathroom break.

This also allows you to keep testing whether your new changes break cluster generation, for example due to a circular dependency.

Disable features

Some things are outside the cluster's operational scope. For example, our audit logs in CloudWatch made up half of the costs of our validation account.

You can also run all nodes on spot instances, which is good practice for your testing and production environments, too. It's free chaos engineering, but make sure the application keeps working. All of our nodes have a 24-hour expiration date, which continuously tests node disruptions.
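The expiration rule itself is simple. A minimal sketch, assuming you can list nodes with their creation timestamps (how you obtain that list and how you replace the nodes depends on your setup and is left out):

```python
from datetime import datetime, timedelta, timezone

MAX_NODE_AGE = timedelta(hours=24)


def expired_nodes(
    nodes: dict[str, datetime], now: datetime, max_age: timedelta = MAX_NODE_AGE
) -> list[str]:
    """Return the names of nodes that have reached the maximum age."""
    return sorted(name for name, created in nodes.items() if now - created >= max_age)


now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
nodes = {
    "node-a": now - timedelta(hours=25),  # hypothetical node names
    "node-b": now - timedelta(hours=3),
}
print(expired_nodes(nodes, now))
```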

Of course, you can do a lot more, but be careful: the more your validation blueprint differs from production, the further the two drift apart.

Nuke it

It can also be good practice to clean up everything in your account. I use aws-nuke for this. Every Friday evening, all resources not explicitly excluded from the nuking process are removed from the AWS account. This ensures you don't have leftover resources from experiments.
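The logic behind such a cleanup can be sketched as follows. This is not aws-nuke's configuration format or API, which is not reproduced here; the resource shape, the `keep` tag, and the 18:00 start of "evening" are assumptions for illustration only.

```python
from datetime import datetime


def is_nuke_window(now: datetime, start_hour: int = 18) -> bool:
    """True on Friday evening (weekday() == 4 is Friday)."""
    return now.weekday() == 4 and now.hour >= start_hour


def select_for_removal(resources: list[dict], keep_ids: set[str]) -> list[str]:
    """Everything is removed unless it is explicitly excluded."""
    return [
        r["id"]
        for r in resources
        if r["id"] not in keep_ids and r.get("tags", {}).get("keep") != "true"
    ]


resources = [
    {"id": "vpc-base", "tags": {}},
    {"id": "bucket-tfstate", "tags": {"keep": "true"}},
    {"id": "rds-experiment", "tags": {}},
]
if is_nuke_window(datetime(2024, 1, 5, 19, 0)):  # a Friday, 19:00
    print(select_for_removal(resources, keep_ids={"vpc-base"}))
```

The important design choice is the default: resources are deleted unless excluded, so forgotten experiments never survive the weekend.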

Conclusion

This is one of the most important technical things I learned at my job last year at Alliander. They have this flow in place, and it works great. I've now implemented this flow at the company where I'm currently working, Viya, and I'm 100% in on this. I'll probably be writing about some technical stuff related to this workflow in the future.